
Azure Pipelines: Build a dockerized app and deploy to kubernetes

2021 update: Since KubeSail was not working as expected, the solution is now hosted on Okteto, which offers developers a free namespace to try out a hosted Kubernetes service.

Every company configures CI/CD differently, and every approach has pros and cons. I have worked with Azure Pipelines before, back when it was called VSTS and configuration was done visually rather than with YAML files. I currently use Travis for CI and Flux for CD, but I think it is always good to keep an eye on what the competitors are doing and what they have to offer.

For this tutorial, I only had to link my GitHub account to Azure DevOps to use its functionality. I did not have to enter a credit card or any billing information, so it is safe to say that it is free, at least for hobby use.

The tutorial will cover:

- Go application with a Dockerfile

- Build and push to Docker Hub (though any other Docker registry would work)

- Deploy to Okteto (which offers a free Kubernetes namespace)

The application

You can find it on GitHub. It is a rather simple REST application, since the purpose here is not to dig into the code but to focus on the CI/CD.

To run it, download the modules first with `go mod download` and then execute `go run cmd/main.go`.

The only functionality it exposes is a `GET /health` endpoint, which returns a JSON response reporting that the service is healthy.

The application can be dockerized by executing `docker build -t gwa:local .` and then run with `docker run -p 80:8080 -it gwa:local`.

The file `azure-pipelines.yml` contains the CI/CD configuration, but more on that later.

Azure DevOps

An account is needed to access Azure Pipelines. Linking the GitHub account and giving access to the demo repository should be enough; after this step, a project should be set up.

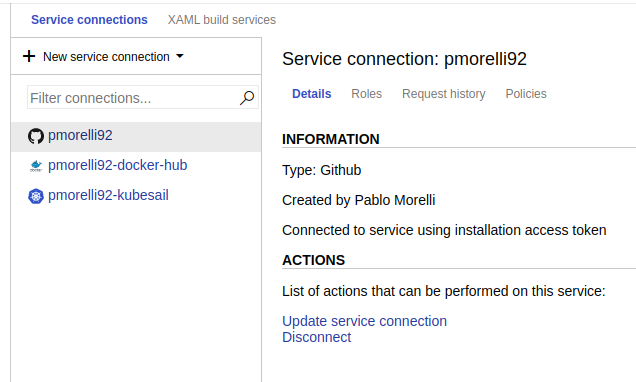

Inside the project, the Project settings button should be visible in the bottom left corner. Scroll down to Service connections and you should see something like this:

This is where the credentials for Docker and Kubernetes are stored.

Select Docker Registry and complete the steps for Docker.

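For Kubernetes, it should be enough to select KubeConfig and paste the kubeconfig that lives under `~/.kube/config` (the `.kube` folder in your user's home directory). Beware: if you have multiple configurations, remove the parts that are not related to the cluster you want to deploy to. With the right context selected, `kubectl config view --minify --flatten` prints only that context's configuration, with certificates embedded.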

Adding a build pipeline

Under the Pipelines menu, pick Builds and add a new pipeline. Follow the steps and give access to the repository (it does not matter whether it is public or private). Pick Starter pipeline when prompted and click Save and run.

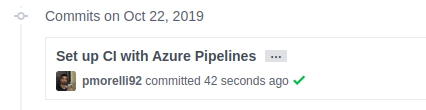

If everything went well, a commit will be added to the repository and the following will appear in its commit history:

The ✔️ indicates that the CI process ran successfully. While the build is in progress a yellow circle is shown, and if the build fails a ❌ appears.

Configuring CI/CD

Back to `azure-pipelines.yml`:

```yaml
trigger:
  - master

schedules:
  - cron: "0 0 * * *"
    displayName: Daily midnight build
    branches:
      include:
        - master

pool:
  vmImage: 'ubuntu-latest'

variables:
  buildNumber: 0.0.$(Build.BuildId)

steps:
  - task: Docker@2
    inputs:
      containerRegistry: 'pmorelli92-docker-hub'
      repository: 'pmorelli92/go-with-azure'
      command: 'buildAndPush'
      Dockerfile: '**/Dockerfile'
      tags: |
        $(buildNumber)
        latest

  - bash: sed -i "s/latest/$(buildNumber)/g" kubernetes/k8s-deployment.yml

  - task: Kubernetes@0
    inputs:
      kubernetesServiceConnection: 'pmorelli92-okteto'
      namespace: pmorelli92
      command: apply
      arguments: '-f kubernetes/k8s-deployment.yml'
```

Let's break it down:

```yaml
trigger:
  - master
```

Specifies which branch triggers the CI/CD pipeline when changes are pushed.

```yaml
schedules:
  - cron: "0 0 * * *"
    displayName: Daily midnight build
    branches:
      include:
        - master
```

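Some projects also trigger builds in other ways, for example with nightly builds. Here, the pipeline runs every day at midnight because otherwise Okteto takes down applications after 24 hours without use. Note that, by default, a scheduled run only happens if the source has changed since the last successful scheduled run; setting `always: true` on the schedule makes it run regardless.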

```yaml
pool:
  vmImage: 'ubuntu-latest'
```

Specifies the image of the machine (agent) that executes the steps.

```yaml
variables:
  buildNumber: 0.0.$(Build.BuildId)
```

Global variables used throughout the pipeline. In this case, the example repository uses semantic versioning (semver) for build versions, with the build ID as the patch number.

```yaml
steps:
```

Represents a chain of steps executed in order. This does not show the full power of pipelines, which support a higher-level hierarchy of stages -> jobs -> steps.

```yaml
- task: Docker@2
  inputs:
    containerRegistry: 'pmorelli92-docker-hub'
    repository: 'pmorelli92/go-with-azure'
    command: 'buildAndPush'
    Dockerfile: '**/Dockerfile'
    tags: |
      $(buildNumber)
      latest
```

This is a built-in task of Azure Pipelines that can (among other things) build and push Docker images.

The `containerRegistry` input refers to the service connection created in the project settings, and `repository` is the name of the repository in that container registry.
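With the two tags above, every successful run pushes the same image under two references. A small, hypothetical Go helper that spells out which references end up in the registry, assuming `$(buildNumber)` has already been substituted and a build ID of 42:

```go
package main

import (
	"fmt"
	"strings"
)

// imageRefs expands a newline-separated tags input (like the `tags: |` block
// above, after variable substitution) into full image references.
func imageRefs(repository, tags string) []string {
	var refs []string
	for _, line := range strings.Split(tags, "\n") {
		tag := strings.TrimSpace(line)
		if tag == "" {
			continue // skip blank lines, e.g. the trailing newline of a YAML block scalar
		}
		refs = append(refs, repository+":"+tag)
	}
	return refs
}

func main() {
	for _, ref := range imageRefs("pmorelli92/go-with-azure", "0.0.42\nlatest\n") {
		fmt.Println(ref)
	}
}
```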

If this task fails, the following steps will not be run.

```yaml
- bash: sed -i "s/latest/$(buildNumber)/g" kubernetes/k8s-deployment.yml
```

One could debate whether a service should contain its own deployment information or be agnostic of it. As a personal preference, I think keeping everything related to the application in the same repository makes it easier to discover.

Of course, there are no secrets here; if there were, Azure Pipelines offers a way to store them safely inside the Azure DevOps project.

That being said, the repository contains a file, `kubernetes/k8s-deployment.yml`, which describes:

- Deployment (the containers to be run)

- Service (a stable network endpoint for reaching the pods)

- Ingress (routes traffic from the outside world to the service)

The deployment needs to specify which version of the Docker image is going to be run:

```yaml
spec:
  containers:
    - name: gwa
      image: pmorelli92/go-with-azure:latest
      ports:
        - name: http
          containerPort: 80
```

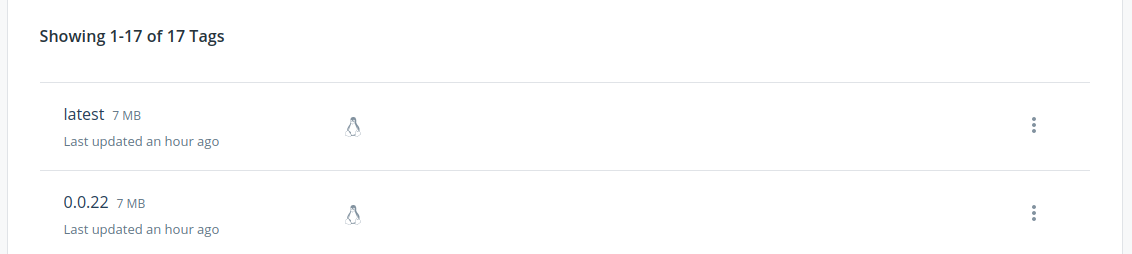

Using the `:latest` tag in staging or production is definitely not a good idea, so the sed script replaces `latest` with 0.0.X (the build number). This is also the same version that is pushed to the selected Docker registry: example.
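The sed expression has no anchors, so it replaces every occurrence of `latest` in the manifest, not only the image tag. That works as long as the word appears nowhere else in the file. A Go sketch contrasting the sed-equivalent replacement with a narrower one that only touches the image line; the function names and the sample manifest with an extra `latest` annotation are hypothetical.

```go
package main

import (
	"fmt"
	"regexp"
	"strings"
)

// replaceAllLatest mirrors `sed -i "s/latest/<version>/g"`: every occurrence
// of "latest" anywhere in the manifest is replaced.
func replaceAllLatest(manifest, version string) string {
	return strings.ReplaceAll(manifest, "latest", version)
}

// imageTagPattern only matches the tag on the image line (repository name hard-coded for the example).
var imageTagPattern = regexp.MustCompile(`(image:\s*pmorelli92/go-with-azure):latest\b`)

// pinImageTag rewrites just the image tag, leaving other "latest" strings alone.
func pinImageTag(manifest, version string) string {
	return imageTagPattern.ReplaceAllString(manifest, "${1}:"+version)
}

func main() {
	manifest := "metadata:\n  annotations:\n    note: tracks latest\n" +
		"spec:\n  containers:\n    - name: gwa\n      image: pmorelli92/go-with-azure:latest\n"
	fmt.Print(replaceAllLatest(manifest, "0.0.42")) // also rewrites the annotation
	fmt.Print(pinImageTag(manifest, "0.0.42"))      // only rewrites the image tag
}
```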

```yaml
- task: Kubernetes@0
  inputs:
    kubernetesServiceConnection: 'pmorelli92-okteto'
    namespace: pmorelli92
    command: apply
    arguments: '-f kubernetes/k8s-deployment.yml'
```

Finally, `kubernetesServiceConnection` indicates the connection created in the project settings, `namespace` refers to a Kubernetes namespace, and `command` combined with `arguments` is the equivalent of `kubectl apply -f kubernetes/k8s-deployment.yml`.

This creates or updates the resources defined in the given file or folder; in this case, the deployment, service and ingress.

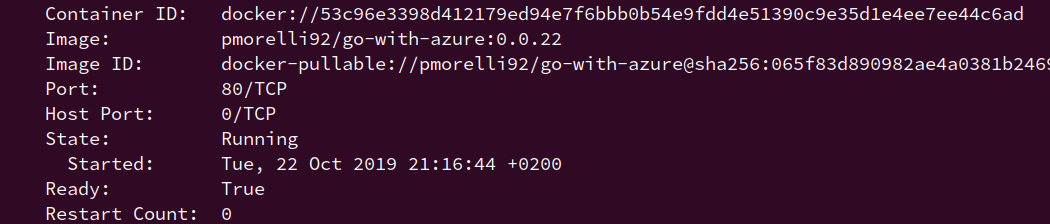

If everything goes green, we can check that the pod's image version matches the latest one pushed to the Docker registry.
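Comparing versions boils down to reading the tag from the pod's image reference (for example the Image field shown by `kubectl describe pod`) and matching it against the newest tag in the registry. A small, hypothetical Go helper for the first part:

```go
package main

import (
	"fmt"
	"strings"
)

// imageTag returns the tag of an image reference such as
// "pmorelli92/go-with-azure:0.0.42", or "latest" when no tag is present
// (Docker's default). A colon before the last "/" belongs to a registry
// host:port, not to the tag. Digest references (@sha256:...) are not handled.
func imageTag(ref string) string {
	lastSlash := strings.LastIndex(ref, "/")
	lastColon := strings.LastIndex(ref, ":")
	if lastColon > lastSlash {
		return ref[lastColon+1:]
	}
	return "latest"
}

func main() {
	fmt.Println(imageTag("pmorelli92/go-with-azure:0.0.42")) // 0.0.42
	fmt.Println(imageTag("localhost:5000/go-with-azure"))    // latest
}
```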

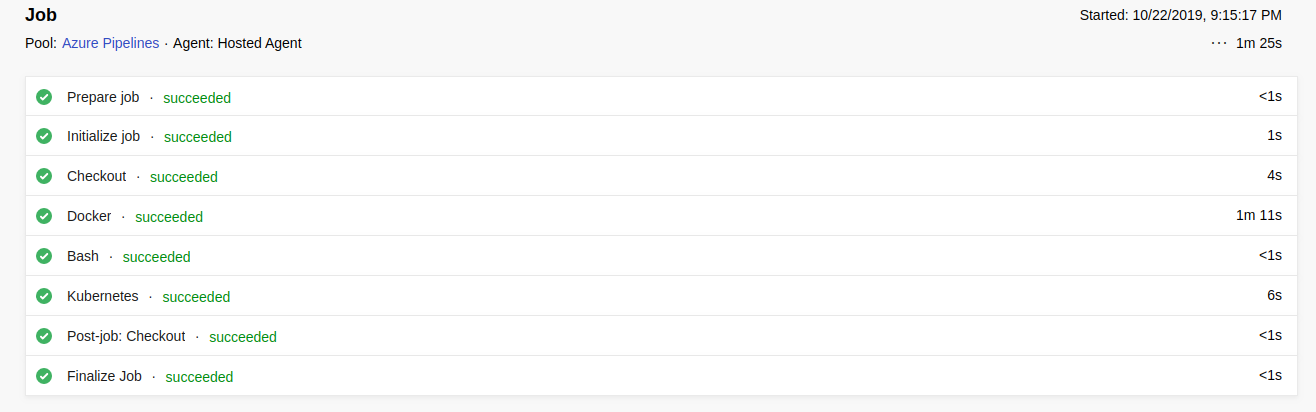

The logs should display something like this:

The demo app is now accessible from the following link.

Summary

In this tutorial we have seen how easy it is to set up a quick CI/CD pipeline that can be improved further according to business and technical needs. If I had to give a verdict on Azure Pipelines:

Pros

- Provides support for CI/CD.

- Part of Azure DevOps, which also provides Git repos, agile boards and reports.

- Free to start, especially for startups.

- Documentation is clear and up to date.

- Backed by Microsoft.

Cons

- Vendor lock-in: some tasks would need to be refactored in order to change CI/CD provider.