How I approach microservice testing in 2023

👉 The codebase used in this post can be found in my GitHub. 👈

Introduction

As a software engineer, I’ve had the opportunity to work with microservices in various companies, and each one had its own unique approach to testing the codebase. From my experience, I’ve come to realize that there’s no one-size-fits-all solution when it comes to testing microservices. Should there be only unit tests? What about the external dependencies? Do we care about what happens if a third party returns an unexpected response? Is contract testing needed? So, what do we do?

In this post the microservice used as example will have the following traits:

- Entrypoint as REST API.

- Domain logic that defines the business rules of the service.

- A connection to a database for data persistence.

To keep it simple, there will not be any other entrypoint and there are no integration events. Additionally, before diving into the specifics of testing microservices, it’s important to understand what microservices are and the different types of tests that are available. We’ll start by discussing those.

Microservice definition

There are a lot of definitions, but the one I always choose to go with is written by Sam Newman and is quite fitting:

Microservices are small, autonomous services that work together. […] A Microservice is a stand-alone service that can be (re)built in no more than two weeks.

Given the fast-paced nature of microservices development, it’s essential to have tests that can quickly provide confidence in the codebase. One starting point is to focus on the Pareto principle, which states that 80% of the results come from 20% of the effort. This means that it’s not necessary to test every single aspect of a microservice, but rather to focus on the areas that will provide the most value, in other words, aim for the good enough.

It’s also important to note that there is no holy grail approach to testing microservices. Different teams may have different testing strategies that work best for them, depending on their domain and the specific needs of their project. Some teams may find that using TDD (Test-Driven Development) practices works well, while others may find that it’s not the best fit. The key is to find a testing strategy that works for your team and your specific microservices project; otherwise you can end up wasting time.

Different types of tests

Testing is an essential part of software development and there are several types of tests that can be used to ensure the quality of your code, for example:

Unit tests: These tests focus on individual units of code, such as single functions or methods. They are designed to test code in isolation, without the need for external dependencies. However, this approach can be less effective when the code relies on such dependencies, for example a database or another service:

    func (s Service) CreatePost(ctx context.Context, userID string) error {
        user, err := s.repository.GetUser(ctx, userID)
        if err != nil {
            return err
        }
        ...
    }

In those cases, unit tests lose much of their effectiveness: you need to mock these dependencies, which in most cases requires working with interfaces, and that in turn adds complexity to your codebase.

Integration tests: These tests focus on the interaction and exception handling between different components. They should not aim for testing business logic as that should be covered with the unit tests. There might be an overlap, but the intent is different.

Acceptance tests: Used to ensure that the system meets the criteria specified by stakeholders and the development team. These could include functionality such as allowing a user to create a post or manage their profile on a social media platform.

Behavioral tests: Test the behavior of the system by simulating user or system interactions. These tests can be used to ensure things such as usability.

In reality, it’s hard to separate these types of tests completely and they often overlap. However, it’s important to understand the different purposes and what is the intention of each type of test. If you take for instance an acceptance test that checks whether a user can create a post we can see that it will also share traits of a unit test, integration test, and behavioral test.

Unit test: The test will likely call domain functions that validate the post content, such as a blacklist filter. These functions should be pure and testable in isolation, but they still need to be valid for the acceptance test to pass.

Integration test: The post needs to be stored somewhere, such as in a Postgres database. This requires testing that the communication between the service and the database is working properly. If an integration with an in-house service is required, one can use containers that return stub data to simulate that service with tools like Mockaco or grpc-mock. This ensures that the mocks are not part of the codebase and avoids creating mock interfaces.

Behavioral test: The entry point of the test is the POST /post endpoint, simulating a user interaction.
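As an illustration of the unit-test trait, a pure blacklist check could look like the sketch below. The function name `containsBlacklisted`, the case-insensitive substring matching, and the term `foo` are assumptions made for the example, not the rules used in the actual codebase.

```go
package main

import (
	"fmt"
	"strings"
)

// containsBlacklisted reports whether content includes any blacklisted term,
// ignoring case. It is pure: the same input always gives the same output and
// it performs no I/O, so it can be tested in isolation.
func containsBlacklisted(content string, blacklist []string) bool {
	lowered := strings.ToLower(content)
	for _, term := range blacklist {
		if strings.Contains(lowered, strings.ToLower(term)) {
			return true
		}
	}
	return false
}

func main() {
	blacklist := []string{"foo"} // assumed term, for illustration only
	fmt.Println(containsBlacklisted("some text without blacklist", blacklist))
	fmt.Println(containsBlacklisted("some text with foo", blacklist))
}
```

A function like this needs no database or HTTP server to be verified, yet the acceptance test still depends on it behaving correctly.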

With that stated, it can be difficult or undesirable to isolate one specific aspect in practice. In most cases then, I found it useful to refer to my tests as acceptance tests, which assert against use cases or features such as:

- Allowing the user to create a post.

- Allowing the user to remove a post.

- Allowing the user to like a post.

It is possible that these tests do not cover 100% of the domain rules or third-party integrations. In that case, it may be necessary to supplement them with more specific unit tests or integration tests, or to make the call on whether the level of confidence in the codebase is good enough.

Confidence versus coverage

In this post, we’ve used confidence as a measure of our codebase instead of coverage. Confidence refers to the sense of security one feels when working on a codebase and making changes. It’s that feeling of “I know this is going to work”. Good coverage is one way to achieve confidence, but it can also be achieved through sound coding practices.

On the other hand, coverage refers to the percentage of the codebase that is executed while running the test cases. However, high coverage does not necessarily indicate that the codebase is reliable if the assertions in the test cases are incorrect. A test suite with only the assertion of true = true will execute successfully, but it won’t provide any real assurance that the codebase is functioning as intended.
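The difference can be shown with a small sketch. The function `isTooLong` and both checks are hypothetical and written as plain functions so the example runs on its own; in a real suite they would be test functions.

```go
package main

import "fmt"

// isTooLong should report whether content exceeds limit bytes.
// It contains a deliberate bug: the comparison is inverted.
func isTooLong(content string, limit int) bool {
	return len(content) < limit
}

// weakCheck runs every line of isTooLong, so coverage is 100%,
// but it asserts nothing about the result and therefore always passes.
func weakCheck() bool {
	_ = isTooLong("hello", 3)
	return true
}

// strongCheck executes the same line and reports whether the
// expectation ("hello" is longer than 3) actually holds.
func strongCheck() bool {
	return isTooLong("hello", 3)
}

func main() {
	fmt.Println("weak check passes:", weakCheck())
	fmt.Println("strong check passes:", strongCheck())
}
```

Both checks produce the same coverage figure, but only the second one notices the bug.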

GWT approach

The Given-When-Then (GWT) approach is a way to write test cases that is based on behavior testing. This method can help to make tests more readable and allow for the reuse of preconditions and assertions.

In the case of creating a post, there are some preconditions that must be met. For example, a user must have an account and the post cannot contain blacklisted words. The GWT approach can be used to test these scenarios:

Scenario 1: User can create a post

- Given the user has an account.

- When the user makes a post via POST /post.

- Then the HTTP response should be 201 and the post should be stored in the database.

Scenario 2: User should not be able to create a post with blacklisted words

- Given the user has an account.

- When the user makes a post with blacklisted words.

- Then the user should receive an error message.

In a real-world microservices scenario, there would likely be an additional step where a post.created integration event is fired, and this is something that can be asserted on. However, for the sake of simplicity, this step has been omitted in the example provided.

How does this look in the codebase?

    func TestCreatePost(t *testing.T) {
        ...
        t.Run("Create post without blacklisted terms", func(t *testing.T) {
            arg := testCreatePost{
                db:          db,
                HTTPAddress: cfg.HTTPAddress,
                userID:      uuid.NewString(),
                content:     "some text without blacklist",
            }
            NewCase[testCreatePostResult](ctx, t).
                Given(arg.userExists).
                When(arg.userCreatesPost).
                Then(arg.responseShouldBeStatus201).
                Then(arg.postShouldExistsOnDatabase).
                Run()
        })
        t.Run("Create post with blacklisted terms", func(t *testing.T) {
            arg := testCreatePost{
                db:          db,
                HTTPAddress: cfg.HTTPAddress,
                userID:      uuid.NewString(),
                content:     "some text with foo",
            }
            NewCase[testCreatePostResult](ctx, t).
                Given(arg.userExists).
                When(arg.userCreatesPost).
                Then(arg.responseShouldBeStatus400).
                Then(arg.postIDShouldBeEmpty).
                Run()
        })
        t.Run("Create post for non existing user", func(t *testing.T) {
            arg := testCreatePost{
                db:          db,
                HTTPAddress: cfg.HTTPAddress,
                userID:      uuid.NewString(),
                content:     "some text for non existing user",
            }
            NewCase[testCreatePostResult](ctx, t).
                Given(arg.userDoesNotExists).
                When(arg.userCreatesPost).
                Then(arg.responseShouldBeStatus400).
                Then(arg.postIDShouldBeEmpty).
                Run()
        })
    }

The codebase, as shown in the snippet above, lays out the GWT approach in an easy-to-follow manner. If you’re interested in learning more about how this approach is implemented, you can take a deeper dive into the case.go file.

Codebase

The codebase is organized in a way that makes it easy to understand and navigate. Here’s a breakdown of the main directories and files you’ll find in it:

.github: This directory contains GitHub Actions workflows that automatically execute acceptance tests using the Makefile every time there is a pull request or a push to the master branch.

accept_test: This directory contains the acceptance tests that will test the application from outside after starting the service using the app package.

app: This is where the application’s configuration and wiring are defined.

cmd: The entrypoint of the application, which simply calls the app package.

database: This directory contains the database migrations.

features: This directory contains one folder for each feature of the application. In this case, the only feature is create_post, but there could be more such as remove_post, like_post, share_post, etc.

Dockerfile.test: This file defines the container used to start the app and run the acceptance tests on it.

docker-compose-test.yaml: This file builds and executes the container defined in Dockerfile.test.

docker-compose.yaml: This file defines the application’s dependencies, in this case only Postgres.

local.env: This file contains default environment variables.

Makefile: This file contains instructions for running acceptance tests locally and with Docker.

Acceptance test

The entrypoint for all the tests is a simple accept_test/main_test.go file. It starts by launching a goroutine that runs the application code.

The tests then drive the service from the outside, for example through REST API calls or by consuming events.

    func TestMain(m *testing.M) {
        go app.Run()
        readinessProbe()
        os.Exit(m.Run())
    }

By executing the same code that the application runs during normal operation, including the parsing of environment variables and any initial startup code, we can increase our confidence in the codebase. Below you can see how similar the actual main.go is in comparison:

    func main() {
        app.Run()
    }

One key aspect of this main acceptance test is the use of a readinessProbe() function. Currently, it simply wraps a time.Sleep call, but it should be updated to use an exponential retry policy to verify that the service is up and running before running the actual test suite. This ensures that the acceptance test only runs when the application is fully ready to handle incoming requests.

Overall, acceptance testing is a powerful way to ensure that your code is working correctly in the context of the entire system, and can help you catch issues before they make it to production.

The case.go file, located in the same folder, contains a helper structure that is used to implement the Given-When-Then approach in the tests. As mentioned before, this helps to make the tests more readable and easier to understand.

Lastly, you can find the create_post_test.go file utilizing a common pattern in Go testing where the main test function (in this case TestCreatePost) runs multiple sub-tests using the t.Run() function. Each sub-test is a separate function that will be run independently and only the failed sub-tests will be reported. This allows for better organization and more granular test failure reporting. The sub-tests are named in a way that describes the scenario being tested, making it easy to understand the purpose of each test. In this example, the code is testing different scenarios for features/create_post.

The idea of having all feature-related concerns under the same folder is to enable easy discovery and extension, knowing that whatever is touched should not affect other features. Of course this has its drawbacks, such as code duplication (preferred over bad abstractions), and it is not a silver bullet, but rarely is anything.

The sub tests are then organized in the following way:

    func TestCreatePost(t *testing.T) {
        t.Run("Create post without blacklisted terms", func(t *testing.T) {
        })
        t.Run("Create post with blacklisted terms", func(t *testing.T) {
        })
        t.Run("Create post for non existing user", func(t *testing.T) {
        })
    }

One of the most attractive features of this architecture is the ease of running and debugging tests locally. The video below demonstrates this capability in action.

This is made possible by two main factors:

- The use of a local.env file that contains default, non-sensitive values required to run the service.

- The configuration of VS Code to read these values for running and debugging tests in the settings.json file:

    {
        "go.buildTags": "integration,accept",
        "go.testEnvFile": "${workspaceFolder}/local.env",
    }

Additionally, if you wish to run the code without executing tests, the .vscode/launch.json file is also configured to support the environment file:

    {
        "version": "0.2.0",
        "configurations": [
            {
                "name": "Debug locally",
                "type": "go",
                "request": "launch",
                "mode": "debug",
                "program": "${workspaceFolder}/cmd/main.go",
                "envFile": "${workspaceFolder}/local.env"
            }
        ]
    }

If you prefer to work outside of the IDE, you can still run the code and tests from the terminal by prefixing the commands with env $(cat local.env):

    env $(cat local.env) go run cmd/main.go
    env $(cat local.env) go test -tags=accept --count=1 ./accept_test/...

The app package does the majority of the work, such as parsing the environment and starting the service, making it easy to ensure everything is wired up correctly with minimal effort.

What about CI?

Setting up your continuous integration (CI) pipeline is a breeze with this architecture. Because all of the logic is housed within the service itself, there’s no need to deal with complex configurations. This also means that you can easily run the same commands that your CI system is executing on your local machine. In this case, the only command you need to run is make test/docker.

This command performs two key tasks:

- It composes and builds dependencies (in this case Postgres) and a golang-alpine container with the code and tests, then proceeds to run both.

- It attaches to the container running the tests to check the exit code and prints results, such as coverage.

The actual command that runs the tests is located in the Dockerfile.test, here is the important part:

    CMD while ! nc -z $DB_HOST $DB_PORT; do sleep 1; done; \
        CGO_ENABLED=0 go test -tags=accept ./accept_test/... \
        -coverprofile=test.coverage.tmp \
        -coverpkg=$(go list ./... | paste -sd ',' -) ./... \
        && cat test.coverage.tmp | grep -v '_test.go' > test.coverage && \
        go tool cover -func test.coverage

Step by step, these lines do the following:

- Wait for Postgres to be up and running before executing the tests. There’s no point in running the tests before the dependencies are ready.

- Execute the tests, including those marked with //go:build accept, and measure the coverage.

- Exclude test files (those with _test.go in their name) from the coverage report, as there’s no need to see the coverage of our test files.

- Print the coverage report.

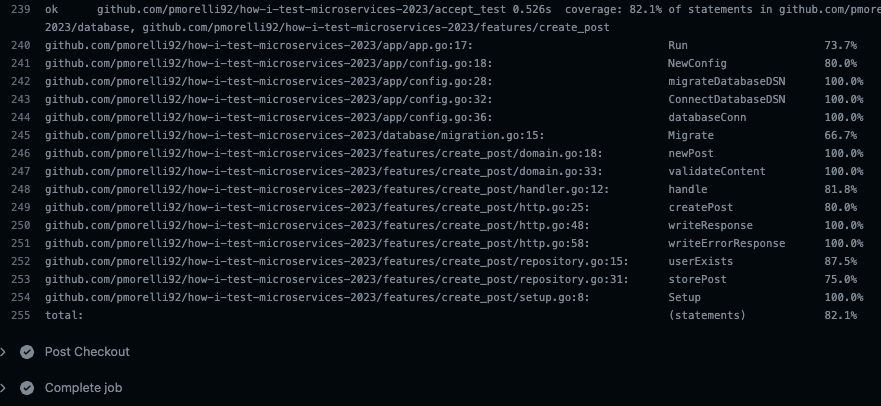

The image above is from the CI, but you can run the same command on your local machine by simply running make test/docker. This makes it easy to test and debug your code locally before pushing to the CI pipeline.

Fight against repetition

A microservice architecture can lead to a lot of repetitive work, especially when it comes to managing files like Dockerfile, Makefile, and docker-compose.yaml across hundreds of repositories. This can be a real headache when it comes to making changes. To tackle this issue, I highly recommend checking out shuttle. This open-source tool, developed by Lunar, is designed to simplify these scenarios. With shuttle, you can keep the files that tend to change together across all repositories in one central repository, and have the other repositories pull them in before executing their scripts. This way, you’ll be able to streamline your workflow and make updates much more efficiently.

Things to keep in mind

When it comes to testing microservices, there is no one-size-fits-all solution. Different types of services, teams, and organizational structures require different testing approaches. What works for one team or company may not work for another.

I’ve found that teams tend to reach a nice consensus around testing codebases with acceptance tests, as this lets the team deliver microservices with quality and without sacrificing velocity.

It’s important to keep in mind that testing is not just about the codebase, but also about the people who will be using it. Therefore, it’s important to consider the team’s needs and goals when implementing any changes.

Before making any sudden changes, it’s important to do your own research and steer in a direction that benefits the team as a whole, rather than just an individual. This way, you can ensure that your testing approach aligns with the specific needs and goals of your team and organization.

2023-01-29 20:30 +0100